Explainability in AI: Why Grad-CAM Matters in Medical Imaging

- Neuroflux Team

- Mar 29, 2025

Across AI in medicine, one critical question continues to hinder adoption: how can healthcare professionals trust what they can't understand?

This is particularly relevant in high-stakes specialties like neuro-oncology, where AI decisions directly impact patient care. Today, we're demystifying one of the most powerful explainability techniques in Neuroflux, our brain tumor segmentation model: Gradient-weighted Class Activation Mapping, or Grad-CAM.

The Black Box Problem in Medical AI

Imagine being a neurosurgeon planning a delicate procedure to remove a glioblastoma. An AI system provides a segmentation of the tumor, but offers no insight into why certain areas were classified as tumorous.

Would you confidently base your surgical approach on this recommendation?

That scenario illustrates the "black box problem" that has limited the clinical adoption of many powerful AI tools. Traditional deep learning models operate as opaque systems that provide outputs without explanations, leaving medical professionals to take their conclusions on faith.

This opacity is further complicated by potential bias in training data, where models trained predominantly on specific demographic groups may perform inconsistently across diverse patient populations.

For understandable reasons, this lack of transparency creates significant barriers to trust and implementation in hospital settings where accountability and explainability are non-negotiable requirements.

What is Grad-CAM?

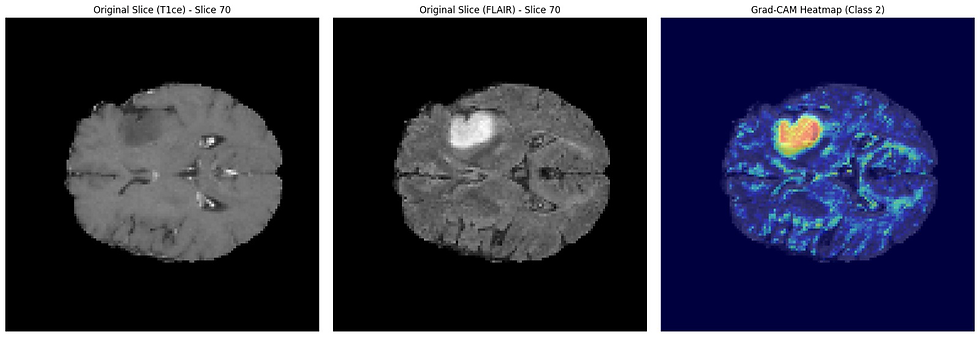

Grad-CAM addresses this transparency problem by creating visual "heatmaps" that highlight which parts of an image most strongly influenced the AI's decision-making process.

Here's how it works in Neuroflux:

When an MRI scan is processed, Grad-CAM records the feature maps produced by the final convolutional layers of the network, along with the gradients of the model's output score with respect to those feature maps.

Each feature map is weighted by its averaged gradient, which reflects its contribution to Neuroflux's final decision.

The weighted feature maps are summed, negative values are discarded, and the result is upsampled and projected back onto the original image as a heatmap.

Areas with warmer colors (reds and yellows) indicate regions that strongly influenced the model's classification of tissue as tumorous.
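The steps above can be sketched in a few lines of NumPy. This is a minimal illustration of the general Grad-CAM computation, not Neuroflux's actual code: the function names `grad_cam` and `upsample_nearest` are hypothetical, the input numbers are made up, and the sketch assumes the feature maps and gradients have already been extracted from the network (in practice a deep learning framework would supply them).

```python
import numpy as np

def grad_cam(activations: np.ndarray, gradients: np.ndarray) -> np.ndarray:
    """Compute a Grad-CAM map from one layer's feature maps.

    activations: (K, H, W) feature maps from a late convolutional layer.
    gradients:   (K, H, W) gradients of the target score w.r.t. those maps.
    Returns an (H, W) map scaled to [0, 1].
    """
    # One importance weight per channel: the spatially averaged gradient.
    weights = gradients.mean(axis=(1, 2))                       # shape (K,)
    # Weighted sum of the feature maps across channels.
    cam = np.tensordot(weights, activations, axes=([0], [0]))   # shape (H, W)
    # ReLU: keep only evidence that increases the target score.
    cam = np.maximum(cam, 0.0)
    # Scale to [0, 1] so the map can be colored consistently.
    peak = cam.max()
    return cam / peak if peak > 0 else cam

def upsample_nearest(cam: np.ndarray, factor: int) -> np.ndarray:
    """Enlarge the coarse map to image resolution by repeating each cell."""
    return cam.repeat(factor, axis=0).repeat(factor, axis=1)

# Toy input: 2 channels of 2x2 feature maps (made-up numbers).
activations = np.array([[[1.0, 0.0], [0.0, 0.0]],
                        [[0.0, 0.0], [0.0, 1.0]]])
gradients = np.array([np.full((2, 2), 2.0),    # channel 0 supports the tumor score
                      np.full((2, 2), -1.0)])  # channel 1 argues against it
cam = grad_cam(activations, gradients)         # only the top-left cell stays hot
heatmap = upsample_nearest(cam, factor=2)      # 4x4 map aligned with a 4x4 "image"
```

For a segmentation model, the "target score" is an assumption that has to be chosen, for example the sum of tumor-class outputs over a region of interest. Real pipelines also tend to use smoother (bilinear) upsampling than the nearest-neighbor repeat shown here.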

Like a window into Neuroflux, Grad-CAM lets us "look inside" the AI's decision-making process, providing visibility that builds trust with clinical users.

Grad-CAM in Action: A Visual Example

Here, you can see how the heatmap doesn't just highlight the tumor itself but also reveals which specific features contributed to Neuroflux's segmentation decision. This extra level of detail gives clinicians valuable context that supports their diagnostic assessment.
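To make the idea of an overlay concrete, here is a small sketch of how a heatmap might be blended onto a grayscale MRI slice. It is an illustration under stated assumptions rather than the rendering used in Neuroflux: `overlay_heatmap`, the simple red-to-yellow color ramp, and the `alpha` default are all hypothetical choices, and both inputs are assumed to be scaled to the range 0 to 1.

```python
import numpy as np

def overlay_heatmap(slice_gray: np.ndarray, heatmap: np.ndarray,
                    alpha: float = 0.4) -> np.ndarray:
    """Blend a [0, 1] heatmap onto a [0, 1] grayscale slice.

    Returns an (H, W, 3) RGB image with values in [0, 1].
    """
    gray_rgb = np.stack([slice_gray] * 3, axis=-1)
    # Simple warm color ramp: red rises first, then green,
    # so low values are dark red and high values are yellow.
    red = np.clip(2.0 * heatmap, 0.0, 1.0)
    green = np.clip(2.0 * heatmap - 1.0, 0.0, 1.0)
    blue = np.zeros_like(heatmap)
    color = np.stack([red, green, blue], axis=-1)
    # Opacity scales with the heatmap, so cold regions show the untouched scan.
    weight = alpha * heatmap[..., None]
    return (1.0 - weight) * gray_rgb + weight * color

# Toy 2x2 "slice" of uniform mid-gray and a made-up heatmap.
mri_slice = np.full((2, 2), 0.5)
toy_heatmap = np.array([[0.0, 1.0],
                        [0.5, 0.0]])
blended = overlay_heatmap(mri_slice, toy_heatmap)
```

Tying opacity to heatmap intensity is one design choice among several; a fixed opacity is also common but tints the whole scan, including regions the model ignored.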

Why Explainability Boosts Clinical Trust

The implementation of Grad-CAM in Neuroflux addresses several critical needs in clinical settings:

Verification

Clinicians can verify whether Neuroflux is focusing on medically relevant features rather than artifacts or unrelated patterns. If the heatmap highlights areas that don't align with clinical knowledge, the segmentation can be appropriately questioned.
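One way this kind of check could be made quantitative is to measure how much of the heatmap's intensity falls inside a region a clinician considers relevant. The sketch below is a hypothetical illustration, not a Neuroflux feature or a validated clinical criterion: `heatmap_mass_inside` and the example arrays are invented for this post.

```python
import numpy as np

def heatmap_mass_inside(heatmap: np.ndarray, region_mask: np.ndarray) -> float:
    """Fraction of total heatmap intensity that falls inside region_mask.

    heatmap:     (H, W) non-negative Grad-CAM values.
    region_mask: (H, W) boolean mask of the clinically relevant region.
    """
    total = heatmap.sum()
    if total == 0:
        return 0.0  # an all-zero heatmap carries no attention to measure
    return float(heatmap[region_mask].sum() / total)

# Made-up example: most of the attention sits on the marked region.
toy_heatmap = np.array([[0.9, 0.1],
                        [0.0, 0.0]])
clinician_region = np.array([[True, False],
                             [False, False]])
inside_fraction = heatmap_mass_inside(toy_heatmap, clinician_region)
```

A low fraction would not prove the segmentation is wrong, but it could flag a case where the model may be attending to artifacts and the output deserves a closer look.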

Reconciliation of Disagreements

When Neuroflux's segmentation differs from a clinician's assessment, the heatmap offers a starting point for resolving the discrepancy, potentially revealing features that human observers missed.

Documentation

The heatmaps provide a visual record of the evidence behind Neuroflux's segmentation decisions, which can be included in patient records and used in multidisciplinary discussions.

Beyond Tumor Boundaries: The Broader Impact

While our primary focus with Neuroflux is accurate tumor segmentation, the Grad-CAM visualizations sometimes reveal insights that extend beyond simply defining tumor boundaries:

Patterns of infiltration that might inform surgical approaches

Subtle features that could suggest specific genetic subtypes of glioblastoma

Early indicators of treatment response when comparing serial scans

By making these insights accessible and understandable, Neuroflux transitions from a mysterious algorithm to a valuable clinical partner that enhances rather than replaces clinicians’ expertise.

The Future of Explainable AI in Neuro-Oncology

Looking forward, additional explainability techniques could be integrated into Neuroflux to provide even more comprehensive insights.

These approaches, combined with our existing Grad-CAM implementation, point toward a new paradigm for explainable AI in medical imaging, one where clinicians and algorithms work together ethically to improve patient outcomes.

By making the invisible visible, Grad-CAM is bridging the gap between advanced AI capabilities and the practical requirements of clinical medicine.

To explore Neuroflux and its Grad-CAM capabilities, visit our GitHub repository or contact us.